I'm a proud dog mom, Maker, data nerd, and self-taught engineer. I created bitsy.ai to share my research and development process for real-world ML applications targeting small devices.

I started studying data science, machine learning, and statistics in 2014, roughly 6 years into my career (which was a grab bag of web apps, systems administration, video game development, and marketing-focused data analytics).

I still remember my first wow moment: scraping Google's shopping API to predict the resale price of niche products with linear regression. The exercise was from Machine Learning in Action by Peter Harrington (chapter 8, "Forecasting the price of LEGO sets").

Besides being awed by this witchcraft 🔮, I remember feeling frustrated and bewildered when I read:

"Regression can be done on any set of data provided that for an input matrix X, you can compute the inverse of XᵀX."

I must have Googled dozens of ridiculous-sounding queries like:

- "XtX math notation"

- "tiny math t"

- "variable superscript matrix"

- "small t math notation"

- "inverse matrix notation" (nope, that one is A⁻¹ 😓)
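For anyone stuck on the same notation: the small T means "transpose," and the quote is describing what is usually called the normal equation, w = (XᵀX)⁻¹Xᵀy. Below is a minimal sketch in plain Python for the simplest case, one feature plus an intercept, where XᵀX is only a 2x2 matrix. The function name `fit_line` and the toy data are my own illustration, not code from the book.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = intercept + slope * x via the normal equation.

    With a column of ones for the intercept, X^T X is the 2x2 matrix
    [[n, sum(x)], [sum(x), sum(x^2)]] and X^T y is [sum(y), sum(x*y)].
    """
    n = len(xs)
    sx = sum(xs)
    sxx = sum(x * x for x in xs)
    sy = sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))

    # Determinant of X^T X. If it is zero, the inverse does not exist,
    # which is exactly the condition the quote warns about.
    det = n * sxx - sx * sx
    if det == 0:
        raise ValueError("X^T X is singular, so it cannot be inverted")

    # Inverse of a 2x2 matrix [[a, b], [c, d]] is (1/det) * [[d, -b], [-c, a]],
    # multiplied here by X^T y.
    intercept = (sxx * sy - sx * sxy) / det
    slope = (n * sxy - sx * sy) / det
    return intercept, slope


# Toy data (made up): four points that sit exactly on y = 1 + 2x.
intercept, slope = fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
```

If every x value is identical, the determinant is zero and the function raises an error, since there is no unique line to fit.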

Authors of machine learning papers (quite reasonably) assumed I had a basic understanding of linear algebra, calculus, and stats/probability. Even the introductory texts were written for and by graduate and PhD students, professors, and industry alums.

I felt like an outsider, trying to decipher a foreign language.

I needed a compelling way to study advanced math, algorithms and many other fundamentals that I side-stepped in 2008, when I dropped out of college to grow my portfolio of small app/plugin businesses.

Luckily, massive open online course (MOOC) platforms like edX and MITx launched within a few years. These platforms provided access to the undergrad material required to make sense of state-of-the-art Machine Learning publications.

To stay motivated through the self-paced courses, I connected theory with an application. For example, when studying calculus I built a proportional-integral-derivative (PID) controller to drive servo motors. I needed to tinker with each term in the algorithm and see real-world results to grok abstract concepts like damping (the job of the derivative term).
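Here is a rough sketch of that kind of tinkering, written as a simulation so it runs without hardware. It is not the code from my tracker: the `PID` class, the `simulate` helper, the gains, and the toy servo model (unit inertia, no friction) are all assumptions made up for illustration.

```python
class PID:
    """Textbook PID controller; the gains kp, ki, kd are tuned by hand."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        # Integral term: accumulated error over time.
        self.integral += error * dt
        # Derivative term: rate of change of the error (zero on the first call).
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(pid, target=90.0, steps=1000, dt=0.01):
    """Drive a toy servo (hypothetical: unit inertia, no friction) toward target.

    The controller output is treated as an acceleration command.
    Returns (final_angle, peak_angle) in degrees.
    """
    angle, velocity, peak = 0.0, 0.0, 0.0
    for _ in range(steps):
        accel = pid.update(target - angle, dt)
        velocity += accel * dt
        angle += velocity * dt
        peak = max(peak, angle)
    return angle, peak


# Same proportional gain, with and without the derivative term.
p_only_final, p_only_peak = simulate(PID(kp=4.0, ki=0.0, kd=0.0))
damped_final, damped_peak = simulate(PID(kp=4.0, ki=0.0, kd=4.0))
```

In this toy model, the proportional-only controller keeps swinging far past the 90 degree target, while adding the derivative term lets the angle settle on it. The integral gain is left at zero here because the simulated servo has no steady disturbance for it to correct.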

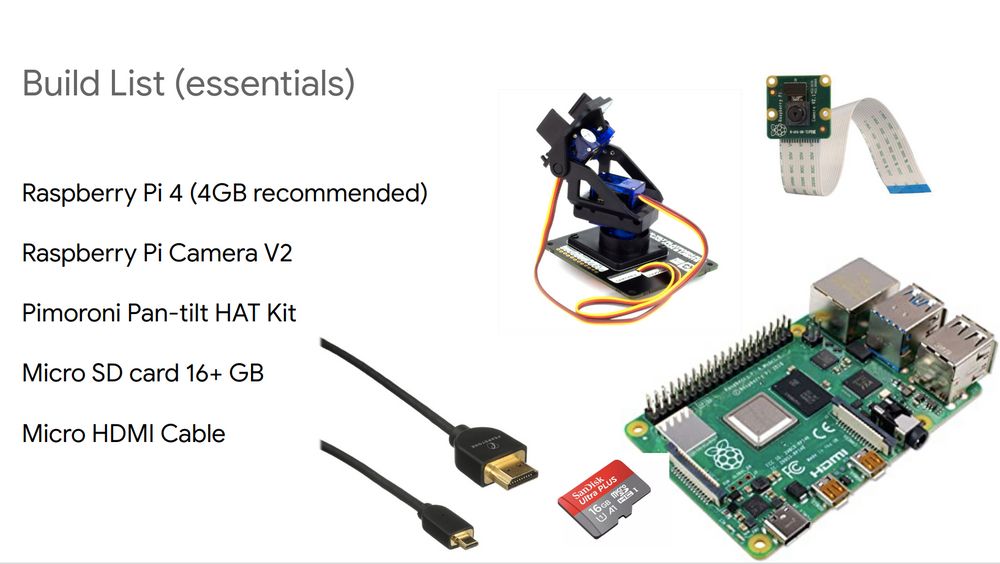

I built a real-time object tracker with @Raspberry_Pi @TensorFlow #TFLite, @pimoroni Pan-Tilt HAT, #EdgeTPU #MobileNetV3 architecture (so fresh 🔥)

Today I:

✅ Released the code (pip install rpi-deep-pantilt)

✅ Wrote a step-by-step guide

✨Enjoy✨ https://t.co/CdNNwS5P7Y https://t.co/ydABxMIvi5 pic.twitter.com/qoOgfNwarN

Leigh (@grepLeigh), December 9, 2019

My projects tend to be hands-on, low-budget, and fueled by my obsession with Raspberry Pis. I manage my Pi projects like immutable server infrastructure (with Ansible); otherwise, they'd be overwhelming to maintain.

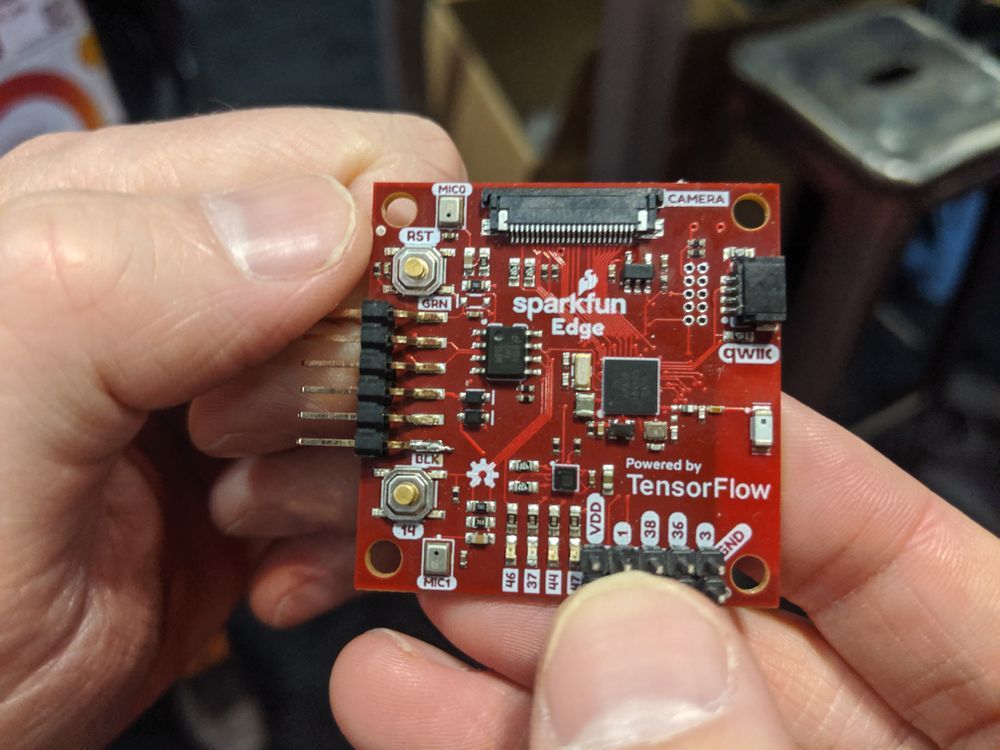

Adapting state-of-the-art research for Raspberry Pi forced me to learn how machine learning frameworks work beneath their user-facing APIs. I often found myself at the bleeding edge of tiny machine learning (TinyML), a nascent field at the intersection of applied machine learning and Internet of Things technologies. The embedded devices it targets are constrained by performance, space, specialized function, and power.

I believe the next significant AI/ML innovations will be born from the constraints of embedded systems, and I'm excited to be here for it!